CamIO (short for “Camera Input-Output”) is a system that enables blind and visually impaired individuals to interact with physical objects (such as documents, maps, devices, and 3D models) by providing real-time audio feedback based on the location that they are touching.

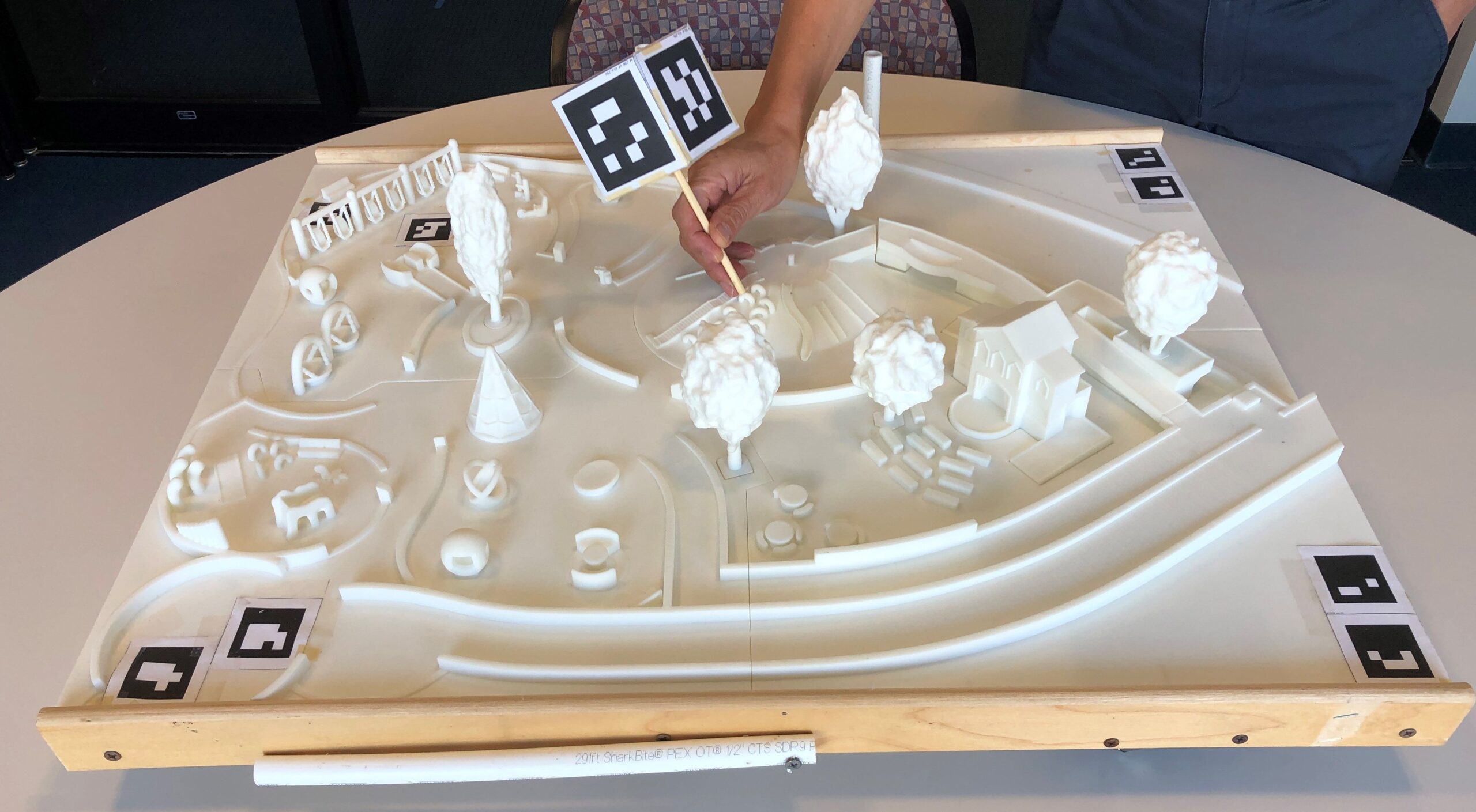

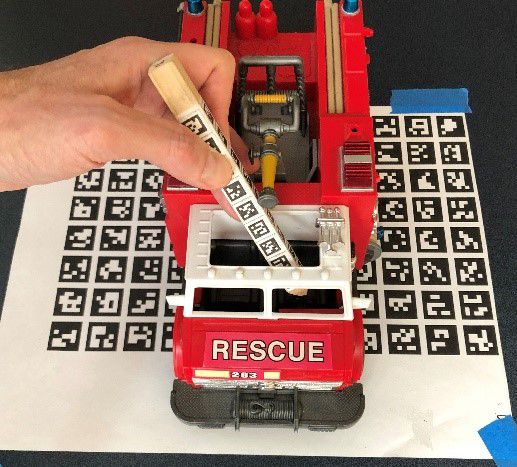

A short video demonstration of CamIO shows how the user can trigger audio labels by pointing a stylus at “hotspots” on a 3D map of a playground. See the Magic Map project page for more information on this specific application of CamIO. Longer videos explain how CamIO fits into the Magic Map approach: CamIO by James Coughlan and CamIO by Brandon Biggs.

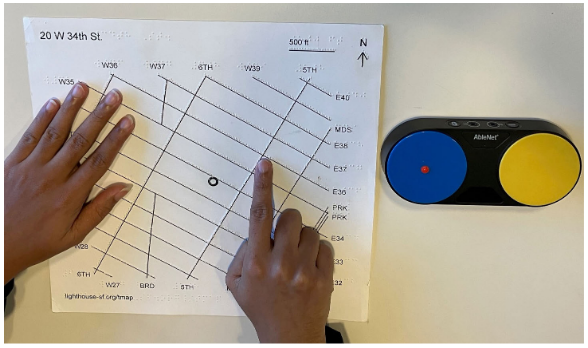

In collaboration with Dr. Sergio Mascetti, Dr. Dragan Ahmetovic and others at the University of Milan, we have modified CamIO to track the user’s hands and fingers, eliminating the need for a stylus. One study, called CamIO Hands, allows the user to tap multiple times to elicit different types of information about a feature on a tactile graphic. A recent extension of this project, called MapIO, adds a conversational interface to CamIO applied to a tactile map, so that the user can ask questions about the map (such as “Where is the nearest Italian restaurant?”) and hear answers read aloud by the system, including real-time guidance to a desired destination on the map. Finally, the new CamIO-Web project allows the user to run CamIO in the browser on virtually any phone, tablet, or computer. It includes two components: CamIO-Explorer, which allows the user to explore any flat tactile graphic, and CamIO-Creator, a tool for annotating any tactile graphic.
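To make the hotspot interaction more concrete, here is a minimal sketch of how a touched location and a tap count might be mapped to an audio label. It is an illustration only, not the CamIO implementation: the `Hotspot` structure, the circular hotspot shape, and the functions `find_hotspot` and `label_for_tap` are all assumptions, and the sketch presumes that the fingertip or stylus position has already been converted into the coordinates of the tactile graphic.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Hotspot:
    """A labeled circular region on a flat tactile graphic (hypothetical structure)."""
    name: str
    x: float                # center of the region, in graphic coordinates
    y: float
    radius: float
    labels: Sequence[str]   # labels[0] for one tap, labels[1] for two taps, ...


def find_hotspot(hotspots: Sequence[Hotspot], x: float, y: float) -> Optional[Hotspot]:
    """Return the hotspot containing (x, y); if several overlap, the nearest center wins."""
    best = None
    best_d2 = float("inf")
    for h in hotspots:
        d2 = (x - h.x) ** 2 + (y - h.y) ** 2
        if d2 <= h.radius ** 2 and d2 < best_d2:
            best, best_d2 = h, d2
    return best


def label_for_tap(hotspots: Sequence[Hotspot], x: float, y: float,
                  tap_count: int) -> Optional[str]:
    """Pick the label to speak for a touch at (x, y) after tap_count taps.

    Extra taps beyond the number of available labels repeat the last label.
    Returns None when the touch is outside every hotspot.
    """
    h = find_hotspot(hotspots, x, y)
    if h is None or tap_count < 1 or not h.labels:
        return None
    index = min(tap_count, len(h.labels)) - 1
    return h.labels[index]


# Example data (invented for illustration)
playground = [
    Hotspot("slide", 10.0, 10.0, 2.0, ["Slide", "A spiral slide near the entrance"]),
    Hotspot("swing", 20.0, 10.0, 2.0, ["Swing set"]),
]
```

In this sketch, a single tap on the slide would return the short label and a second tap the longer description; the returned string would then be passed to whatever text-to-speech component the system uses.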

A CamIO-Web system has been installed in the Google Accessibility Discovery Center (ADC) in Milan, where it is used to add interactivity to a tactile map of the ADC space.

The CamIO project received the 2020 Dr. Arthur I. Karshmer Award for Assistive Technology Research. It was supported by a four-year grant from NIH/NEI (R01EY025332), which was renewed in 2022 for another four years (2R01EY025332-05A1). It has also received support from the NIDILRR RERC (grant numbers 90RE5024 and 90REGE0018).